Intro

This isn’t going to be your typical MacBook review, because there are plenty of those out there and most of them are very good. This review is for people who don’t give two craps how this year’s model compares to last year’s model. Instead, they want to know how this year’s model compares to their crusty old ThickBook Pro from five years ago, because that’s the one they’re upgrading from. Cool? Cool.

Without further ado, here’s how the 13″ MacBook Pro with Touch Bar fares against the mighty 15″ MacBook Pro (Early 2011).

Size and Ports

I thought my old 15″ MacBook Pro was a laptop. I was wrong. Not only did it frequently burn my lap from running too hot, it was also heavy and annoying to lug anywhere. In contrast, my new MacBook Pro stays cool and feels light as a feather.

The early 2011 15″ MacBook Pro weighed 5.6 pounds. The new 15″ models are only 4 pounds, and the 13″ model is actually weightless. Just kidding. But at only 3.02 pounds, it kinda feels like it! I can carry my 9-month-old son Charlie with one hand and my laptop with the other, so it doesn’t get much more portable than that.

My old laptop had a lot of ports, some of which are mysterious to me (a Kensington lock slot? Really?!). My new one has four: Thunderbolt 3, Thunderbolt 3, Thunderbolt 3 and Thunderbolt 3. Oh, and a headphone jack that’s inexplicably on the opposite side from where it sat on every previous MacBook.

So yeah, you’re gonna lose your CD drive, SD card slot, Ethernet port, flimsy old USB 2.0 ports and good ol’ FireWire 800. In exchange, you’re getting four ports that are approximately one zillion times faster at data transfer than what you had. I’d call that a win.

Sure, the loss of MagSafe is a bummer, but being able to plug in the power cable on either side of the computer is really, really convenient.

And honestly, I’m not upset about the adapter situation, because the ports on my old MacBook are mostly obsolete and I really only need two adapters: one for SD cards and one for the USB-A drive I use for Time Machine backups.

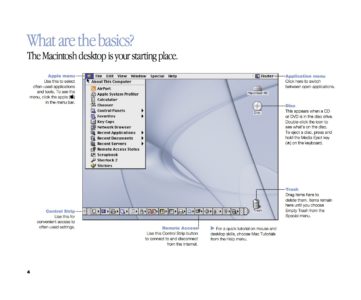

Display

The 2011 Pro models were the last to have non-Retina displays. If by chance you’ve never seen a Retina display in person, know this: it alone is worth upgrading your computer for. It’s like taking Claritin, or getting glasses for the first time. Everything is so crisp and clear that you’ll never be able to go back to blurland.

Note, however, that to enjoy the full Retina experience on the 2016 MacBooks, you’ll need to change the display’s default scaling (under System Preferences > Displays).

In addition to being ultra clear, the display is also significantly brighter than the MacBooks of yore and can display many more colors (in technical terms: it has a wider color gamut). It really is beautiful!

Right below the display you’ll see the familiar “MacBook Pro” label in silver lettering, except now it’s set in the new system font, San Francisco, which looks much nicer in my opinion.

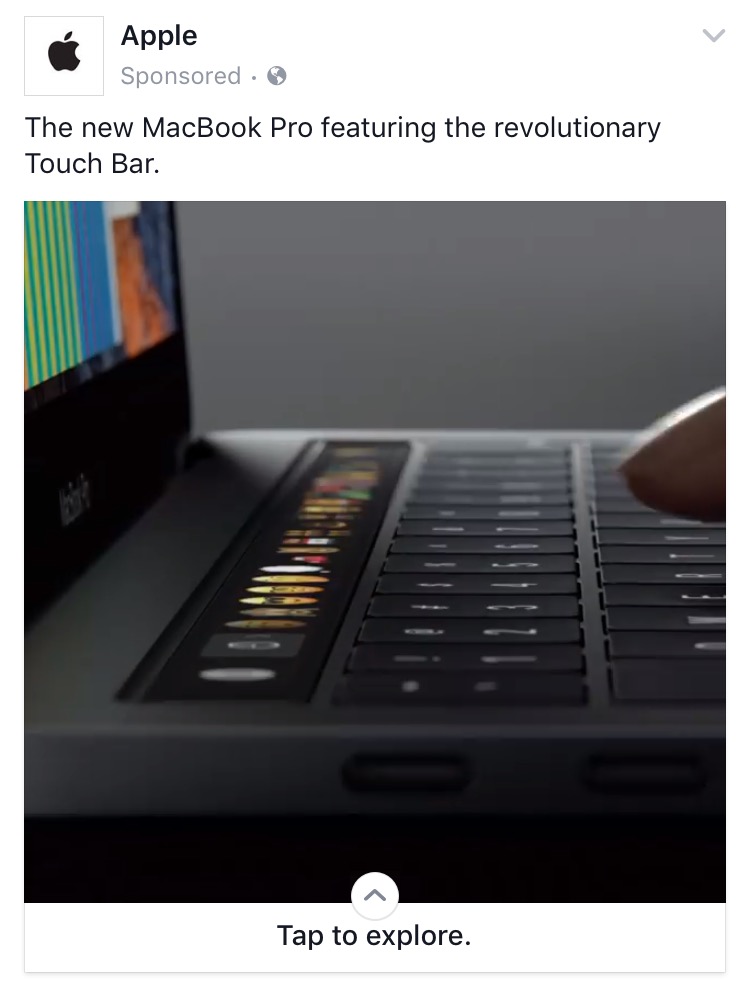

The Touch Bar

I never learned the keyboard shortcut for comparing an edited photo to its original version in Photos. I still don’t know what it is, and probably never will because there’s a button for that on the Touch Bar now.

I like the Touch Bar. I think it’s fun, and I enjoy customizing it in any app that will let me. Like adding stickers to the outside of a laptop or changing its desktop picture, choosing which functions to add to the Touch Bar makes my MacBook feel more personal to me.

I personally know several people who struggle to use computers but always buy MacBook Pros because they know the Pro is the nicest machine in the lineup. These users rarely look through an application’s menus, and if they do, they’re afraid to try stuff. I think the Touch Bar surfaces useful features for both professionals and people who aren’t particularly “good with computers.”

Keyboard and Trackpad

Once you get used to it, the keyboard on this thing will make your old MacBook Pro’s keyboard feel mushy.

The new keyboard is low and tight and snappy. That’s the only way I know to describe it. It feels really good to me, and I don’t like going back to the old one.

Some reviewers have noted (both positively and negatively) that the new keyboard seems louder. That’s true, but you can also type quietly on it. My baby wakes up if he hears a fly buzzing across the room, yet I was able to type sitting next to his bed without waking him…so there’s that.

The trackpad is roughly as wide as my iPhone 6s is tall (in its case!), which is to say it’s fairly ginormous. Your old MacBook’s trackpad is hinged at the top and only really clicks near the bottom; on this one, you can press down anywhere. I opened my 15″ Pro the other day to find a file and was super frustrated that I couldn’t press down anywhere…and I’m normally a tap-to-click person! In other words, this trackpad is rad and I love it.

Speed

Despite moving from a quad-core to a dual-core processor, this computer can run circles around my old one. It used to take 20 minutes to copy Xcode into my Applications folder on the 2011 MacBook Pro, with its 500GB spinning hard drive. My new 13″ Pro with Touch Bar has a 1TB SSD, and I don’t even remember seeing a progress bar when I moved Xcode. It’s fast. Everything is fast. Launching applications, compiling code, performing Spotlight and Mail searches, saving and moving files…it’s all fast.

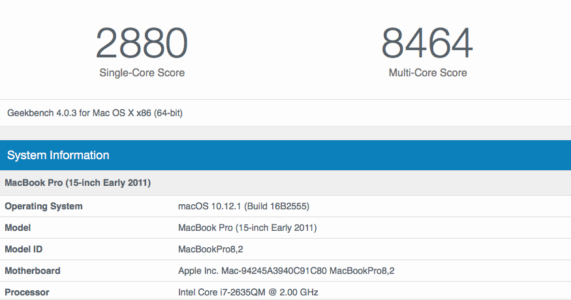

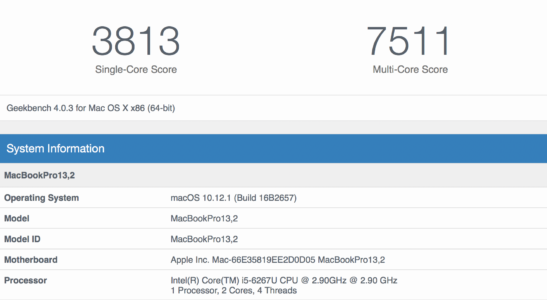

I ran Geekbench 4 on both machines, and here are the results:

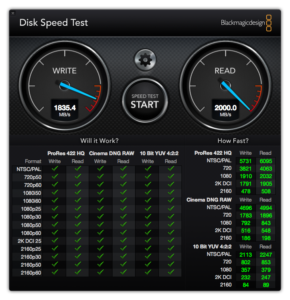

I also ran Blackmagic Disk Speed Test, which is where the difference really becomes massive:

I had upgraded my 15″ Pro from 4GB to 8GB of RAM, which is something you can’t do on these new machines because the RAM is soldered to the logic board. They’re locked down tight, with an abysmal 1/10 repairability score on iFixit. Still, my new MacBook has 16GB of RAM. Even with Xcode, Simulator, Photoshop, Affinity Designer, Safari, Photos and iTunes open, I still haven’t come close to running out of memory.

Conclusion

If, like me, you’ve been waiting a very long time to upgrade your MacBook Pro, the new models with Touch Bar are an enormous improvement. And if you also enjoy being an early adopter like I do, you’re going to love playing around with the Touch Bar. I see no compelling reason to hold out for the next iteration of these machines. It’s unlikely that Apple will bring back any legacy ports, and besides the usual speed bump and the possibility of an e-ink keyboard, I’m not sure what else might change (other than the price, which will hopefully drop a bit). In other words, if you’re holding on to a four- to six-year-old machine, now’s the time to open your wallet and get yourself a great new laptop!