I thought it might benefit other beginners if I wrote up an overview of how I went about building LiveRotate. (Spoiler alert: there was a lot of Googling involved!)

Starting the Project

When I began, I didn’t have the foggiest idea how PhotoKit worked, and I had all but forgotten how to use collection views, which help you display things in a grid. So, I turned to Apple to see if they had a sample project for the Photos framework and luckily, they do. It has even been updated to “illustrate the use of LivePhoto APIs.” Right on!

I then translated almost the entire thing, line by line, into Swift. I’m not joking. I needed the code for the collection view, for displaying a Live Photo with a badge, and for caching thumbnails as you scroll, and that was honestly the bulk of the project (if anybody needs any of that code in Swift, just let me know!). As I translated the code, I learned what each piece did, so that I wouldn’t just be blindly copying things without building up my understanding.

Handling Rotation

Deciding how to rotate the photos was confusing at first because there are two ways you can do it. One is to set a rotation flag that determines how a photo is displayed on a device (but that flag may not be respected by all programs/devices). The other is to “physically” rotate the bits using some kind of transform. The second option seemed like the right way to go, so I set about learning two new frameworks: Core Graphics for the JPEG part of the Live Photo and AVFoundation for the QuickTime movie part.

Rotating Photos

There are three types of image-related classes in iOS: UIImage, CGImage, and CIImage. For a beginner, that was SUPER CONFUSING (and still sort of is). Some more searching led me to a category for rotating CIImages by 90 degrees. The Swift equivalent of an Objective-C category is an extension. So, I translated that code as follows:

extension CIImage {
    func imageRotatedByRadians(radians: CGFloat, imageOrientation: UIImageOrientation) -> CIImage {
        let finalRadians = -radians
        var image = self

        // First pass: rotate the image around the origin.
        let rotation = CGAffineTransformMakeRotation(finalRadians)
        let transformFilter = CIFilter(name: "CIAffineTransform")
        transformFilter!.setValue(image, forKey: "inputImage")
        transformFilter!.setValue(NSValue(CGAffineTransform: rotation), forKey: "inputTransform")
        image = transformFilter!.valueForKey("outputImage") as! CIImage

        // Second pass: translate the rotated image so its extent starts at (0, 0) again.
        let extent:CGRect = image.extent
        let translation = CGAffineTransformMakeTranslation(-extent.origin.x, -extent.origin.y)
        transformFilter!.setValue(image, forKey: "inputImage")
        transformFilter!.setValue(NSValue(CGAffineTransform: translation), forKey: "inputTransform")
        image = transformFilter!.valueForKey("outputImage") as! CIImage

        return image
    }
}

Here’s an overview of the photo rotation steps:

- Request the photo data using PHAssetResourceManager

- Create a CIImage from the data and use the extension to rotate it

- Add appropriate metadata (more on this later), convert the resulting image to a JPEG and save it to a temporary location
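The middle of that list (data in, rotated JPEG out) can be sketched roughly like this. It is not the app's code: it uses current Swift naming rather than the Swift 2 syntax above, it swaps in Core Image's built-in `oriented(_:)` in place of the extension, and the function name `rotatedJPEGData` is made up for illustration. Fetching the data with PHAssetResourceManager and writing the Live Photo metadata are left out.

```swift
import Foundation
import CoreImage
import ImageIO

/// Hypothetical helper: decodes image data, rotates it, and re-encodes it as JPEG.
/// `orientation` picks the turn to apply: `.left` or `.right` for a quarter turn,
/// `.down` for a half turn.
func rotatedJPEGData(from data: Data, orientation: CGImagePropertyOrientation) -> Data? {
    // Step 2 of the list: build a CIImage from the raw photo data.
    guard let image = CIImage(data: data) else { return nil }

    // Rotate the pixels themselves (assumption: built-in call instead of the extension).
    let rotated = image.oriented(orientation)

    // Step 3 of the list, minus the metadata: render the result back out as JPEG.
    let context = CIContext()
    let colorSpace = image.colorSpace ?? CGColorSpaceCreateDeviceRGB()
    return context.jpegRepresentation(of: rotated, colorSpace: colorSpace)
}
```

The returned data could then be written to a temporary URL with `Data.write(to:)`.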

Rotating Videos

Rotating the video portion of the Live Photo turned out to be much, much trickier. This Technical Q&A from Apple describes which methods actually rotate the buffers and which only set a rotation flag. In order to rotate the video, I needed to use an AVAssetExportSession and apply a transform.
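For anyone who hasn't seen an export session with a transform before, here is a minimal sketch of the general shape, in current Swift naming. The function names, the error enum, and the 30 fps frame duration are my assumptions for illustration, not the code from the app. The caller is expected to pass a transform that already includes any translation, plus a render size with width and height swapped for quarter turns.

```swift
import AVFoundation
import CoreGraphics

enum RotationExportError: Error { case noVideoTrack, cannotCreateSession }

/// Hypothetical helper: a video composition that applies `transform`
/// to one track for the whole duration of the video.
func makeRotationComposition(trackID: CMPersistentTrackID, duration: CMTime,
                             transform: CGAffineTransform, renderSize: CGSize) -> AVMutableVideoComposition {
    let layer = AVMutableVideoCompositionLayerInstruction()
    layer.trackID = trackID
    layer.setTransform(transform, at: .zero)

    let instruction = AVMutableVideoCompositionInstruction()
    instruction.timeRange = CMTimeRange(start: .zero, duration: duration)
    instruction.layerInstructions = [layer]

    let composition = AVMutableVideoComposition()
    composition.renderSize = renderSize
    composition.frameDuration = CMTime(value: 1, timescale: 30) // assumed 30 fps
    composition.instructions = [instruction]
    return composition
}

/// Hypothetical helper: re-encodes the asset so the frames themselves are rotated.
func exportRotatedVideo(asset: AVAsset, transform: CGAffineTransform, renderSize: CGSize,
                        to outputURL: URL, completion: @escaping (Error?) -> Void) {
    guard let track = asset.tracks(withMediaType: .video).first else {
        completion(RotationExportError.noVideoTrack)
        return
    }
    guard let session = AVAssetExportSession(asset: asset,
                                             presetName: AVAssetExportPresetHighestQuality) else {
        completion(RotationExportError.cannotCreateSession)
        return
    }
    session.videoComposition = makeRotationComposition(trackID: track.trackID,
                                                       duration: asset.duration,
                                                       transform: transform,
                                                       renderSize: renderSize)
    session.outputURL = outputURL
    session.outputFileType = .mov
    session.exportAsynchronously {
        completion(session.error) // nil when the export succeeded
    }
}
```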

There are 4 orientations that a photo or video may be captured in. I made this convenience method to take the video’s original transform and return information about it.

func orientationFromTransform(t: CGAffineTransform) -> (orientation: String, isPortrait: Bool) {
    var assetOrientation = "Up"
    var isPortrait = false
    if t.a == 0 && t.b == 1.0 && t.c == -1.0 && t.d == 0 {
        assetOrientation = "Right"
        isPortrait = true
    } else if t.a == 0 && t.b == -1.0 && t.c == 1.0 && t.d == 0 {
        assetOrientation = "Left"
        isPortrait = true
    } else if t.a == 1.0 && t.b == 0 && t.c == 0 && t.d == 1.0 {
        assetOrientation = "Up"
    } else if t.a == -1.0 && t.b == 0 && t.c == 0 && t.d == -1.0 {
        assetOrientation = "Down"
    }
    return (assetOrientation, isPortrait)
}

Each of those 4 orientations could then be potentially rotated 3 different ways: 90 degrees, -90 degrees, and 180 degrees. When you rotate the video, you rotate it around its origin point, which can potentially move the video out of the frame. Therefore you have to apply a translation to get it back to where it’s supposed to be. Derek Lucas (@derekplucas) got me started by creating a Playground that rotated videos on the Mac. I took his translation values and had to tweak them, via trial and error, to get it to work on iOS. Here’s just a small sample of what that hot mess looks like:

var adjustY = videoSize.width
var adjustX = CGFloat(0)
if (radians == CGFloat(-M_PI_2)) {
    if orientation == "Right" || orientation == "Up" {
        adjustX = videoSize.height
        adjustY = 0
    } else if orientation == "Left" {
        adjustX = videoSize.width
        adjustY = -1 * videoSize.width / 4
    } else {
        adjustX = videoSize.width
        adjustY = -1 * videoSize.height / 4
    }
}
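To see why a translation is needed at all, here is a small, purely geometric sketch in current Swift. It rotates a frame-sized rectangle around the origin, measures where the rotated bounding box ended up, and shifts it back to (0, 0). This is the textbook version and not the tuned values above, which came from trial and error on real videos; the function name is made up for illustration.

```swift
import CoreGraphics

/// Hypothetical helper: a rotation followed by whatever translation brings
/// the rotated frame's bounding box back to the origin.
func rotationKeepingFrameAtOrigin(size: CGSize, radians: CGFloat) -> CGAffineTransform {
    let rotation = CGAffineTransform(rotationAngle: radians)

    // Where does a frame of this size land after rotating around (0, 0)?
    let rotatedBounds = CGRect(origin: .zero, size: size).applying(rotation)

    // Rotate first, then translate by the amount the bounding box drifted.
    let translation = CGAffineTransform(translationX: -rotatedBounds.minX,
                                        y: -rotatedBounds.minY)
    return rotation.concatenating(translation)
}

// A 1440 x 1080 frame turned by a quarter turn ends up roughly 1080 x 1440,
// with its corner back at the origin (up to floating-point error).
let frame = CGRect(x: 0, y: 0, width: 1440, height: 1080)
let transform = rotationKeepingFrameAtOrigin(size: frame.size, radians: .pi / 2)
let result = frame.applying(transform)
```

Without the translation step, the same quarter turn would leave the rectangle spanning negative x coordinates, which is the "moved out of the frame" problem.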

Once rotated, I saved the video to a temporary file.

Live Photo Metadata

You can’t just throw any two photos and videos together and make a Live Photo without doing a little extra work. I found this project by genadyo on GitHub that shows what sort of metadata must be written into the photo and video files in order for them to be paired up correctly.

Basically, you have to do 5 things:

- Create an identifier of some kind, assign it to the key kFigAppleMakerNote_AssetIdentifier (which is “17”) in a new dictionary and set that dictionary as the kCGImagePropertyMakerAppleDictionary for your JPEG file.

- Create an AVMetadataItem where the key is “com.apple.quicktime.content.identifier” and the value is the identifier you created in the first step.

- Create an AVMetadataItem where the key is “com.apple.quicktime.still-image-time” and the value is 0. For some reason, this is required in order for iOS to recognize it as a true Live Photo.

- Use AVAssetWriter to re-save the video you made using AVAssetExportSession, this time writing in the appropriate metadata. Of course, if you aren’t rotating the video, you could just use AVAssetWriter from start to finish.

- Save both the photo and the video to Photos like so (where “fileURLs” is an array containing the two temporary URLs for the photo and video):

PHPhotoLibrary.sharedPhotoLibrary().performChanges({
    let request = PHAssetCreationRequest.creationRequestForAsset()
    request.addResourceWithType(PHAssetResourceType.Photo, fileURL: fileURLs.first! as NSURL, options: photoOptions)
    request.addResourceWithType(PHAssetResourceType.PairedVideo, fileURL: fileURLs.last! as NSURL, options: videoOptions)
}, completionHandler: { success, error in
    // Check success and error here
})
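The identifier pairing from the first three steps might look something like this in current Swift. The function names are invented for illustration, and the data types (UTF-8 for the identifier, a signed 8-bit integer for the still image time) are my assumption rather than something stated above. How the items actually get written into the video with AVAssetWriter is not shown.

```swift
import Foundation
import AVFoundation
import ImageIO

/// JPEG side (hypothetical helper): the Apple maker note dictionary that
/// carries the shared identifier under key "17".
func makerAppleProperties(identifier: String) -> [String: Any] {
    return [kCGImagePropertyMakerAppleDictionary as String: ["17": identifier]]
}

/// Video side (hypothetical helper): a QuickTime metadata item with the given key and value.
func quickTimeItem(key: String, value: NSCopying & NSObjectProtocol,
                   dataType: CFString) -> AVMetadataItem {
    let item = AVMutableMetadataItem()
    item.keySpace = .quickTimeMetadata
    item.key = key as NSString
    item.value = value
    item.dataType = dataType as String // assumed data type, see the note above
    return item
}

// One identifier shared by the photo and the video.
let identifier = UUID().uuidString

let photoProperties = makerAppleProperties(identifier: identifier)
let contentIdentifierItem = quickTimeItem(key: "com.apple.quicktime.content.identifier",
                                          value: identifier as NSString,
                                          dataType: kCMMetadataBaseDataType_UTF8)
let stillImageTimeItem = quickTimeItem(key: "com.apple.quicktime.still-image-time",
                                       value: 0 as NSNumber,
                                       dataType: kCMMetadataBaseDataType_SInt8)
```

The dictionary from `makerAppleProperties` is what would be passed along as image properties when the JPEG is written out.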

Conclusion

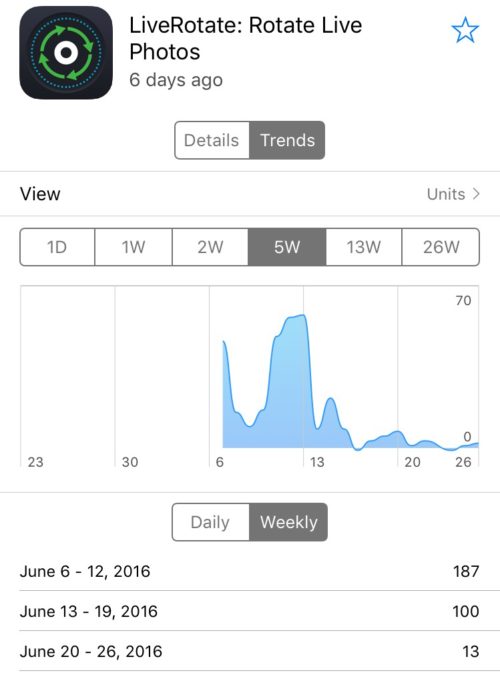

I started LiveRotate on April 27 and finished it on June 6, so it took just a little over a month to make. I’ve had some good suggestions for improvements to the app and hope to implement those soon. For now, though, my brain can finally break free from “obsessive coding” mode and focus on important things like catching up on household chores and cooking some real food!

Edit: 4:35 pm CDT

I forgot to add that I created the app’s icon in Photoshop CS6, and translated the app into German, Spanish, Italian and Russian via a hilarious process of changing my phone’s language, opening up apps that had the words/phrases I needed, and screenshotting them. I know, I’m a dang thief!